Eduardo D. Sontag

University Distinguished Professor, Electrical and Computer Engineering & Bioengineering, Northeastern University

Affiliate, Mathematics & Chemical Engineering, Northeastern University

Faculty, Laboratory of Systems Pharmacology, Program in Therapeutic Science, Harvard Medical School

Research Affiliate, Laboratory for Information and Decision Systems, MIT

Affiliate, Institute for Quantitative Biomedicine, Rutgers

Short CV:

Eduardo Sontag's major current research interests lie in several areas: control and dynamical systems theory, systems molecular biology, synthetic biology, computational biology, and cancer and immunology.

He received his Licenciado degree from the Mathematics Department at the University of Buenos Aires in 1972, and his Ph.D. (Mathematics) under Rudolf E. Kalman at the University of Florida in 1977. From 1977 to 2017, he was with the Department of Mathematics at Rutgers, The State University of New Jersey, where he was a Distinguished Professor of Mathematics, a Member of the Graduate Faculty of the Department of Computer Science and of the Department of Electrical and Computer Engineering, and a Member of the Rutgers Cancer Institute of NJ. In addition, Dr. Sontag served as head of the undergraduate Biomathematics Interdisciplinary Major, Director of the Center for Quantitative Biology, and Director of Graduate Studies of the Institute for Quantitative Biomedicine. In January 2018, Dr. Sontag was appointed University Distinguished Professor in the Department of Electrical and Computer Engineering and the Department of Bioengineering at Northeastern University. In 2019, he was appointed as an affiliate in the NU departments of Mathematics and Chemical Engineering, and since 2021 he has been a member of the Faculty and of the Leadership Council of the Institute for Experiential AI at NU. Since 2006, he has been a Research Affiliate at the Laboratory for Information and Decision Systems, MIT, and since 2018 he has been a member of the Faculty in the Program in Therapeutic Science at Harvard Medical School.

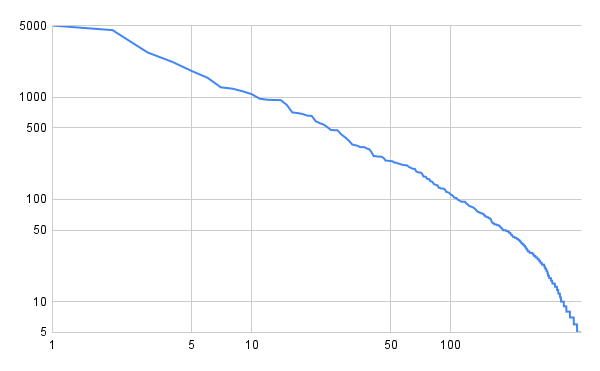

Eduardo Sontag has authored over five hundred research papers, monographs, and book chapters in the above areas, with ~61K citations and an h-index of 106. He is on the Editorial Board of several journals, including Cell Systems, IET Proceedings Systems Biology, Synthetic and Systems Biology, International Journal of Biological Sciences, and Journal of Computer and System Sciences, and is a former editorial board member of SIAM Review, IEEE Transactions on Automatic Control, Systems and Control Letters, Dynamics and Control, Neurocomputing, Neural Networks, Neural Computing Surveys, Control-Theory and Advanced Technology, Nonlinear Analysis: Hybrid Systems, and Control, Optimization and the Calculus of Variations. In addition, he is a co-founder and co-Managing Editor of the Springer journal MCSS (Mathematics of Control, Signals, and Systems).

He is a Fellow of several professional societies (IEEE, AMS, SIAM, and IFAC) and a member of SMB and BMES. He has served as Program Director and Vice-Chair of the SIAM Activity Group in Control and Systems Theory, and as a member of several committees at SIAM and the AMS, including as Chair of the AMS Committee on Human Rights of Mathematicians in 1981-1982. He was awarded the Reid Prize in Mathematics in 2001, the 2002 Hendrik W. Bode Lecture Prize and the 2011 Control Systems Field Award from the IEEE, and the 2022 Bellman Control Heritage Award from the American Automatic Control Council (AIAA/AIChE/INFORMS/ASCE/ASME/IEEE/ISA/SIAM). He also received the 2002 Board of Trustees Award for Excellence in Research and the 2005 Teacher/Scholar Award from Rutgers.

Itemized main honors:

Selected Public Research Rankings:

- Publications (including most preprints and reprints and searchable by content, year, coauthors, or keywords/categories)

- Google Scholar: ~60K citations and h-index = 105 as of 07/2023 [printout/spreadsheet as of 12/2019]

- ResearchGate

- My ORCID ID

- Mathematical Control Theory book (online copy freely available)

- Notes on Systems Biology (online only)

- MCSS journal

- Suggestions (for students and collaborators) when writing papers

- R.E. Kalman (my thesis adviser; article in Notices of AMS, Nat.Med.Sci., 2009)

- "SontagFest 2011" photos slideshow (video, mp4 format, 22 minutes)

- "SontagFest 2011" photos (zip file with photos)


Data sources:

Thomas Alva Edison (Edison Laboratory, Menlo Park)(Physics Tree)

|

| (trained research scientist) <-- Edison was not formally a professor

|

Arthur Edwin Kennelly (Massachusetts Institute of Technology)(Physics Tree)

|

| (trained grad student)

|

Vannevar Bush (Carnegie Institution of Washington)(Computer Science Tree)

|

| (trained grad student)

|

John B. Russell (Columbia University)(Computer Science Tree)

|

| (trained grad student)

|

John Ralph Ragazzini (Columbia University)(Computer Science Tree)

|

| (trained grad student)

|

Rudolf Emil Kalman (Columbia University)(Computer Science Tree)

|

| (trained grad student)

|

Eduardo D. Sontag


E. Sontag's work spans control and dynamical systems theory, theoretical computer science, machine learning, cancer and immunology, and molecular, synthetic, and computational biology.

- Starting with his PhD thesis, Sontag developed the foundations of observability, identifiability, and realizations of discrete-time (and later continuous-time) algebraic nonlinear systems, based on the idea of studying the dual adjoint dynamics on "system observables". This work was the subject of his Invited Address to the 1994 International Congress of Mathematicians.

- Following the work of Kalman and coauthors, Sontag made some of the most significant advances in the theory of linear systems over rings, in which the entries of the system matrices (A, B, C) belong to rings of operators (useful in studying the stabilization of delay-differential systems), to the integers (no round-off), or to rings of polynomial functions of parameters (useful in the stabilization of parametrized families of systems). For example, his 1982 paper (with Khargonekar) [4a] presented the first state-space formulas for stable proper coprime factorizations, which are required for controller parameterization in the Desoer-Youla-Vidyasagar sense and are framed naturally in the language of systems over rings.

- In a 1980 CDC paper with his Rutgers colleague Sussmann, Sontag showed that it is not possible to stabilize every controllable system by continuous feedback. This demonstrated that attempts to replicate results from linear control theory might lead to feedback laws that lack robustness to noise. They also showed, however, that time-periodic feedback stabilization is possible, at least in dimension one, a result that Coron subsequently greatly extended. Sontag then developed a whole line of research on stabilization by discontinuous feedback; a particularly remarkable achievement in this line is the TAC paper showing that every asymptotically controllable system can be stabilized by discontinuous feedback.

- Another direction largely initiated by Sontag and Sussmann was the stabilization of linear systems by bounded feedback; a first paper announced the full solution of the problem, but with a construction that was not practically implementable. Teel improved on this work with a brilliant approach based on nested saturations for the chain of integrators. In turn, Sontag and Sussmann extended that construction to the general case, and Sontag and his students went on to prove additional robustness and ISS properties of these designs.

- Arguably, the best-known contribution of Sontag's control theory work is the notion of input-to-state stability (ISS). This paradigm has been employed in tens of thousands of papers by other authors and in dozens of books, both in further theoretical developments and in applications. Prior to the 1989 introduction of ISS, two main streams of nonlinear systems theory remained disjoint: classical Lyapunov stability theory dealt with state-space models but had very limited capability to incorporate disturbances, while Sandberg-Zames input-output theory, more suited to engineering needs, was unable to benefit from the rich insights gained from Lyapunov theory. Thus, responses to inputs and responses to initial conditions existed in separate control theory "universes". The new notion of ISS seamlessly integrated Lyapunov and I/O theory. The first paper was followed by at least a decade of further theoretical advances, and in the hands of design-oriented researchers ISS became a tool for the synthesis of control algorithms that are robust to disturbances and unmodeled dynamics.

- Probably the next best-known contribution of Sontag's control work is the notion of control Lyapunov functions (cLf's). He introduced non-smooth cLf's in 1981 (Artstein soon after developed the idea of smooth cLf's), and later the "universal formula" for stabilization. These notions, together with ISS and its derivatives, allowed Lyapunov functions to be used as a control synthesis tool, and not merely an analysis tool, through innovations by other authors such as backstepping, forwarding, inverse optimal control, "safe control", control barrier functions, and "safeguards", which have been key in areas ranging from stable biped walking to automated and driverless cars.

- Sontag was also a pioneer in the now-popular field of hybrid systems. His 1980 paper on piecewise linear systems showed their power as controllers, combining continuous-variable dynamics with ideas from discrete mathematics and computer science toward a comprehensive approach to the regulation of nonlinear systems.

- In the late 1980s, Sontag started the study of computational complexity in nonlinear control. He proved that deciding nonlinear controllability for bilinear systems is NP-hard, a fundamental limitation not expected at that time, thus establishing that the search for efficiently testable necessary and sufficient conditions (an area of great activity at the time) could not possibly succeed.

- Sontag's interest in neural networks (already evident in an Artificial Intelligence book that he published as an undergraduate) culminated in the 1990s in his examination of the foundations of feedforward and recurrent neural networks, and more generally of computational learning theory; he provided the first results on the sample complexity and VC dimension of analog neural networks. A remarkable paper showed that what are now called "deep neural networks" are necessary to stabilize non-holonomic systems, which was surprising given the focus on single-hidden-layer architectures for control at the time. Going further, he developed (with his student Siegelmann) a theory of analog computing, showing that even analog computers would not be able to solve certain computational problems unless a condition similar to "P = NP" held. Many of these papers were accepted for presentation at the most selective computer science conferences (FOCS, STOC, COLT, NIPS).

- Since around the year 2000, Sontag has turned much of his research to questions inspired by systems biology. Feedback is as central to living systems as it is in engineering, from homeostatic mechanisms for temperature, pressure, or chemical levels, to the delicate interactions between infections, tumors, and the immune system. In contrast to engineering systems governed by physical laws understood at least a century ago, the feedback models in biology contain substantial uncertainty and noise -- the very things that living organisms overcome to survive. Sontag's effort has focused on understanding what is special about biological control systems. The analysis of biological problems often leads to the discovery of fundamental new concepts in control theory, which may be subsequently applied in other fields.

- In a 2001 paper, Sontag showed how the stability of a popular model of immune cell activation could be established using chemical reaction network (CRN) theory, in the process extending certain stability proofs of CRN theory from local to global and quantifying robustness properties. In a line of very successful follow-up work with Angeli and others, he vastly expanded knowledge of the dynamics of biochemical networks, work that has since been taken up by a large community.

- Also with Angeli, and again motivated by biological signaling networks, Sontag introduced in a 2004 paper the idea of monotone systems with inputs, in the process advancing classical results by Hirsch, Smith, Smale, and others in mathematics. The introduction of inputs (and outputs) made it possible to analyze large systems by decomposing them into smaller monotone subsystems, for example through new small-gain theorems (different from those for ISS), an approach that would have been impossible if only classical isolated dynamical systems were considered. This work led to an explosion of interest in the control community in monotone systems and in systems decomposable into monotone subsystems, with applications well beyond the original biological motivation.

- Much of Sontag's recent research has focused on the interactions between therapies, immune system components, and tumors and/or infections. Resistance is often viewed as the result of Darwinian selection of random genetic or epigenetic modifications, either pre-existing (standing variation) or arising during treatment (de novo). However, experimental evidence suggests that the progression to resistance need not occur randomly, but may instead be induced by the therapeutic agent itself. This "Lamarckian" process of resistance induction can result from genetic changes, or can occur through epigenetic alterations that cause otherwise drug-sensitive cancer cells to undergo "phenotype switching". This relatively novel notion of resistance further complicates the already challenging task of designing treatment protocols that minimize the risk of evolving resistance. To better understand treatment resistance, Sontag (with Greene and Gevertz) developed a mathematical modeling framework that incorporates both random and drug-induced resistance. The model demonstrates that the ability (or inability) of a drug to induce resistance can result in qualitatively different responses to the same drug dose and delivery schedule. This model has already had an impact, forming the basis of experimental work such as the DNA barcoding work in Brock’s lab at UT.

- Sontag also considered a related question, that of systemic resistance in the context of metronomic chemotherapy. In work with Waxman's lab at BU, he developed a mathematical model to elucidate the complex interactions between tumor growth, immune system activation, and therapy-mediated immunogenic cell death. The model is conceptually simple, yet it provides a surprisingly good fit to empirical data obtained from a GL261 mouse glioma model treated with cyclophosphamide on a metronomic schedule. Strikingly, a single fixed set of parameters, adjusted neither for individuals nor for drug schedule, closely recapitulates experimental data across various drug regimens. Additionally, the model predicts peak immune activation times, recovering experimental data that had not been used in parameter fitting or model construction. The validated model was then used to predict tumor-immune dynamics under novel drug administration schedules; it suggested that immunostimulatory and immunosuppressive intermediates are responsible for the observed resistance and immune cell recruitment, and thus for the variation in response across different drug administration schedules.

- In work with Zloza's lab at Rutgers, a different type of resistance was studied; this work provided a novel mechanistic model of the interactions between a non-oncogenic viral lung infection (A/H1N1/PR8), distal B16-F10 skin melanoma, T cells, and checkpoint inhibition therapy, which might explain the increased tumor growth observed by Zloza's lab.

- Since the early 1990s, many authors have independently suggested that self/nonself recognition by the immune system might be modulated by the rates of change of antigen challenges, in addition to the antigen identity. In recent work, Sontag introduced a very simple mathematical model which predicts that exponentially increasing antigen stimulation (tumor growth, acute infections, doubling vaccine dose in successive boosters) will enhance immune response, and which recovers, in particular, behaviors observed 30-40 years ago (“two-zone tumor tolerance” phenomenon).

- The advent of the field of (molecular, systems) "synthetic biology" at the turn of the 21st century was motivated by two distinct but mutually reinforcing goals. The first goal is to design novel (or re-engineer existing) molecular biological systems, extending or modifying the behavior of organisms, and to control them to perform new tasks. Through the de novo construction of simple elements and circuits, the field aims to develop a systematic approach to obtaining new cell behaviors in a predictable and reliable fashion. Applications include targeted drug delivery, immunotherapy (e.g., redesign of cytotoxic T cells to fight tumors), renewable energy (e.g., biofuels through ethanol-producing bacteria), re-engineering bacterial metabolism for waste recycling, biosensing (e.g., detecting environmental pathogens or toxins), tissue homeostasis (e.g., designing self-differentiating β cells for type 1 diabetes treatment), and computing (e.g., molecular computing). The second, and no less important, goal is to improve the quantitative and qualitative understanding of basic natural phenomena. Experimental validation in theoretical biology is hampered by the practical difficulty of testing models, due to interlocking regulatory loops in tightly controlled naturally occurring cellular systems. A very useful approach to testing (mathematical) models of a biological system is to design and construct synthetic versions of the hypothesized system. Discrepancies between expected and observed behavior serve to highlight either research issues that need further study, or knowledge gaps and inaccurate assumptions in models. Synthetic networks, as opposed to naturally occurring ones, have the advantage that they can be designed specifically to test hypotheses, thus affording a "clean playground" in which to test models.
Systems can be built with exactly the components that are believed to be relevant to a given biological signal processing and/or control function. In this fashion, the combinatorial effects of multiple feedforward and feedback loops, or the steps involved in complex cellular responses to stimuli, can be deconstructed and analyzed. The basic knowledge gained from synthetic constructs thus helps advance our understanding of natural complex systems. Sontag's lab has contributed to various areas of synthetic biology, ranging from the theoretical foundations of modular interconnections ("retroactivity" and "resource competition" papers, in collaboration with Del Vecchio's lab at MIT) and the design of distributed multi-cell computations (with Voigt's lab at MIT) to the analysis and design of transcriptional gene regulatory networks, protease-based enzymatic biosensors (with Khare's lab at Rutgers), and cell-free biological systems (with Noireaux's lab at Minnesota).

- The different areas of Sontag's systems biology work share an emphasis on fundamental principles of signal processing, feedback, and control, and benefit from the rich set of conceptual and practical tools developed from his expertise in feedback control theory and other areas of applied mathematics, computer science, and engineering. One example is the work on network inference of biological pathways. The web of interactions between genes, proteins, metabolites, and small molecules gives rise to complex circuits that enable cells to process information, store memories, and respond to external signals, and the reverse-engineering problem aims to unravel these interactions from experimental data such as changes in concentrations of active proteins, mRNA levels, and so forth. Steady-state "Modular Response Analysis" (developed with Kholodenko in the early 2000s) is by now widely applied, and it allows one to discover fundamental limitations of perturbation-based approaches to network reconstruction (work with Gunawardena's lab at Harvard Medical School). Complementing this, the transient behavior of systems subject to external stimuli can provide deep insights into network structure (work with Rahi's lab at EPFL), especially when coupled to log-sensing and other input invariances ("fold change detection" work with Alon at the Weizmann Institute).
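The ISS notion described in words in the bullets above can be stated compactly. The following is a sketch of the standard formulation as it commonly appears in the control literature (not a quotation from any specific paper); K, K∞, and KL denote the usual classes of comparison functions, and |·| is a norm on the state space.

```latex
% Nonlinear system with state x(t) \in \mathbb{R}^n and input u(t) \in \mathbb{R}^m
\dot{x} = f(x, u)

% ISS: there exist \beta \in \mathcal{KL} and \gamma \in \mathcal{K} such that,
% for every initial state x(0) and every bounded input u,
|x(t)| \le \beta\big(|x(0)|,\, t\big) + \gamma\big(\|u\|_\infty\big)
\quad \text{for all } t \ge 0

% Equivalent Lyapunov-type characterization (ISS-Lyapunov function V),
% with \alpha_1, \alpha_2, \alpha_3 \in \mathcal{K}_\infty and \sigma \in \mathcal{K}:
\alpha_1(|x|) \le V(x) \le \alpha_2(|x|), \qquad
\nabla V(x) \cdot f(x, u) \le -\alpha_3(|x|) + \sigma(|u|)
```

The first term of the estimate bounds the effect of the initial condition, as in Lyapunov theory, while the second is an input-output style gain from the input; this is the sense in which ISS joins the two viewpoints.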

His work in nonlinear control theory led in 1981 to the first formulation of control-Lyapunov functions, and later to a "universal formula" for smooth stabilization, as well as, from the late 1980s to the mid 1990s, to the introduction of input-to-state stability (ISS), a stability notion for nonlinear systems, and various related variants (iISS, etc.).
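The "universal formula" mentioned above can be illustrated in its simplest setting. The sketch below assumes a single-input control-affine system ẋ = f(x) + g(x)u with a known control-Lyapunov function V, writes a = ∇V·f and b = ∇V·g, and uses the form of the formula as it is usually stated in textbooks. The test system ẋ = x + u and V(x) = x²/2 are hypothetical choices made only for illustration.

```python
import math


def sontag_feedback(a: float, b: float) -> float:
    """Universal formula for a single-input control-affine system.

    a = L_f V(x) = grad V(x) . f(x)
    b = L_g V(x) = grad V(x) . g(x)
    Returns u = -(a + sqrt(a^2 + b^4)) / b when b != 0, and u = 0 otherwise.
    """
    if b == 0.0:
        return 0.0
    return -(a + math.sqrt(a * a + b ** 4)) / b


# Hypothetical example (an assumption, not from the text): x' = x + u, V(x) = x^2 / 2.
def f(x: float) -> float:
    return x  # unstable open-loop drift


def g(x: float) -> float:
    return 1.0  # the input enters directly


def grad_V(x: float) -> float:
    return x  # derivative of V(x) = x^2 / 2


def closed_loop_rhs(x: float) -> float:
    """Right-hand side of x' = f(x) + g(x) u with u from the universal formula."""
    a = grad_V(x) * f(x)
    b = grad_V(x) * g(x)
    return f(x) + g(x) * sontag_feedback(a, b)


# Forward-Euler simulation from x(0) = 2 over 5 time units.
x, dt = 2.0, 0.01
for _ in range(500):
    x += dt * closed_loop_rhs(x)
print(f"x(5) is approximately {x:.4f}")
```

With these choices a = x² and b = x, so the formula reduces to the linear feedback u = -(1 + √2)x and the closed loop becomes ẋ = -√2 x. More generally, substituting the formula gives V̇ = a + bu = -√(a² + b⁴), which is negative whenever (a, b) ≠ (0, 0); this is why a control-Lyapunov function combined with the formula yields a stabilizing feedback.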

During the same period, he also developed tools for the stabilization of linear systems under actuator saturation.

In the 1980s, he pioneered techniques for the analysis of nonlinear systems based on commutative algebra and algebraic geometry, for observability and minimal realizations.

In the early to mid 1980s, he developed notions of computational complexity for control systems, and published one of the first papers on hybrid (piecewise linear) systems.

In the late 1990s, Sontag focused on discontinuous stabilization, a topic that had originated in joint work with Sussmann in 1980.

Other work in the 1980s to early 1990s dealt with the foundations of observation spaces, identifiability, and input/output equations for nonlinear behaviors, as well as the completion of a major textbook, Mathematical Control Theory.

Sontag has a long-standing interest in biology and neuroscience, starting with a book on AI that he authored while an undergraduate.

In the 1990s, he established basic results on representability, identifiability, and super-Turing computability by neural networks, as well as "PAC learning" sample estimates for dynamical systems, rates of function approximation, and a foundational result on neural-network feedback control ("two hidden layers are necessary").

Since 2000, he has increasingly been motivated by molecular biology, and has published in systems biology (and more purely biological) journals on bacterial chemotaxis, the cell cycle, immune recognition, interactions between cancers and infections, chemotherapy-induced resistance, metastasis, epigenetics, ribosome flow models, and tumor heterogeneity.

He has also worked on population models (Lotka-Volterra species competition, epidemiology).

Another recent major direction of work has been in synthetic biology, and specifically the design of genetic and post-translational circuits for gene copy number compensation, rejection of disturbances, and Boolean computation.

More generally in systems biology, he has worked on fundamental mathematical principles of monotone systems, stochastic models, and chemical network theory.

Some specifics:

- Center for Quantitative Biology (formerly, BioMaPS)

- Ph.D. Program in Quantitative BioMedicine

- BioMathematics Interdisciplinary Undergraduate Major

- Workshop on Perspectives and Future Directions in Systems and Control Theory, May 23-27, 2011, and an article about it in Control Systems Magazine